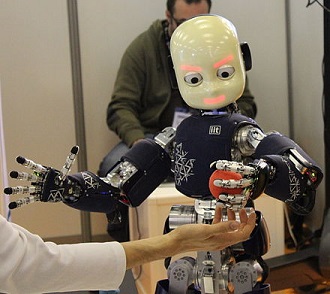

Courtesy of wikipedia.org

Kazuo Ishiguro’s latest novel, Klara And The Sun, speculates on how a robot might learn to adapt to humans. The question he poses is interesting, but we humans should spend time thinking about how we ought to adapt to them. First, we should consider what we want from robots and what disruptions they might impose.

At the moment, technological advances come from innovators with dollar signs in their eyes. The results aren’t always a plus for the public. For example, look at the way Facebook has eroded personal privacy.

Despite the problems that company has caused, Facebook is working on a new idea. Programmers are designing wearable computers that allow our thoughts to interface with machines. A user no longer types a word to see it on the screen but simply thinks it. (“Does a Robot Get to Be the Boss of Me?” by Meghan O’Gieblyn, Wired, 28.86, pg. 28-29.)

For those with limited use of their hands, the benefit is unquestionable. But can we trust Facebook to anticipate the downside to this advance?

One parameter we should consider is the degree to which a human/robot interface is desirable. Take policing, for example. We use machines to good effect at times: when we need to detonate a bomb or must crawl into dangerous places. But should we create limits on their use?

Consider the Derek Chauvin case. We know a police officer kept his knee on a man’s neck for several minutes, despite the victim’s gasps that he couldn’t breathe. Ignoring those pleas, Chauvin maintained his position for nine minutes and twenty seconds. At nine minutes and twenty-one seconds, George Floyd, the man on the ground, was dead.

What shocked so many who saw the killing was Chauvin’s robotic behavior. For nine minutes and twenty-one seconds, he felt a man dying beneath his knee and showed no mercy.

Presumably, he was doing his duty. Many killers have defended their actions with a similar argument. They were soldiers at Auschwitz or Mỹ Lai. They served Andrew Jackson on the Trail of Tears. They were servants of God during the Inquisition. Or, they were patriots who employed torture in a time of war. No matter the gloss, most hearts recoil at their actions.

What we must fear is that one day the human species may employ robots to do the unthinkable. Given the right algorithm, robots can inflict pain without remorse and commit immeasurable atrocities that, because their work is impersonal, will spare their masters pangs of guilt, just as war pilots are spared when they drop bombs upon sleeping villages.

Why punish Chauvin for performing as some future robot might do?

We hold Chauvin accountable because he forces us to confront human nature’s dark side. We expect a robot to be devoid of feeling. We expect a human to have a scintilla of compassion. We hope that when a savage impulse overtakes us, we will reach an inflection point, a moment of grace when compassion steps upon the stage to wrestle with our demon.

If conscience fails to summon that brighter angel, we become less than robots. We become monsters.

As we build our brave new world, let us hope those better angels guide our judgments. To question, to doubt, to feel is the essence of being human. Unpredictability is our hallowed gift.

Before I created a soldier or a policeman out of bolts and wires, I’d consider the task to be served and to what degree predictability was necessary. The decision calls for omniscience, and it is one that God has often failed. Throughout our history, people have walked among us, behaving as if they had an inflection point, yet in times of upheaval they reveal an inhuman face. Until we understand the difference between them and us, I am in no hurry to create robots like ourselves.